Acoustic signal processing is a growing area of focus in healthcare and biomedicine. The characteristics of acoustic signals, especially those of abnormal breath sounds, provide clinicians with valuable information on respiratory diseases [1]. Traditionally, clinicians have relied on the stethoscope as an indispensable tool because of its reliability and efficiency in the investigation of bodily sounds. However, the interpretation of breath sounds heard through a stethoscope is user dependent, and because each examination is a single point-in-time measurement, it does not allow continuous monitoring of daily symptoms and their fluctuations [2]. With advances in sensing technology, wearable devices are now available as promising solutions. They facilitate automatic and continuous acoustic analysis and assist clinicians in evaluating the effectiveness of a prescribed intervention [3].

Among the various breath sounds, wheeze is of particular interest because its presence and duration are a significant reference for clinicians diagnosing and monitoring various pulmonary pathologies, including chronic obstructive pulmonary disease (COPD), bronchiolitis, and asthma [4]. Cough is another common respiratory sound and a significant symptom of interest. Its pattern and changes over time can indicate disease evolution in more than 100 different diseases, and its resolution reflects the effectiveness of therapy [5].

The biological features of cough, wheeze, and breath sounds can be characterized by both spectral and temporal analysis [6,7]. For example, wheezing sounds occur during respiration when the airway is obstructed or narrowed; hence, the fundamental frequency of the respiratory sound is higher than that of a normal breath. Furthermore, wheeze is often perceived as a musical tone whose harmonics can be observed in the spectral domain. Coughs share some of these properties, such as a higher fundamental frequency and harmonics, but they are explosive in nature, whereas wheezes are continuous and more commonly found in the expiration phase than across the entire respiratory cycle.

Given these biological characteristics of wheeze and cough sounds, various classification algorithms have been developed to differentiate wheeze and cough signals using their spectral and temporal features. Applying hidden Markov models to cepstral features extracted from a database of 2155 cough events, Matos et al. [8] achieved 82% detection accuracy in the binary classification of cough and non-cough sound samples. Aydore et al. [9] investigated 246 wheeze and non-wheeze epochs using Fisher’s ratio and the Neyman-Pearson hypothesis test and achieved 93.5% sensitivity. Jain et al. [10] extracted spectral features from 19 wheeze samples and 21 other lung sounds and applied a multi-stage fixed-threshold classifier, reaching 85% overall accuracy. Lin et al. [11] developed a bilateral filter-based edge-processing technique and detected 87 of 90 wheeze samples. Time-series deep learning models have also been examined. For example, Justice Amoh [12] used a convolutional neural network and a recurrent neural network for cough detection among 14 subjects and achieved 82.5% and 79.9% overall accuracy, respectively. Arati Gurung [13] reviewed 12 recent studies of automatic abnormal lung sound detection, in which the average accuracy was 85%.

However, all of the above studies focused on the detection of a single symptom; the robustness of such methods when applied to multiple concurrent symptoms, a feature of ‘real-world’ clinical practice, was not addressed. To the best of the authors’ knowledge, the only study that explored the detection of multiple concurrent symptoms, including cough and wheeze, was performed by Himanshu S. Markandeya [14]. That system employed a two-stage classification structure and achieved 90% accuracy on 74 recordings. It identified discrete wavelet transform (DWT) coefficients for symptoms and applied a specific processing method to each identified DWT coefficient to determine symptoms. This classification algorithm, however, assumes that wheeze sounds are resolved in the D5 coefficients, whereas another study [15] showed that wheeze data carry useful information throughout D6 to D2. Therefore, using exclusive processing methods for specific DWT coefficients would be unsuitable when the actual symptom does not fall in its expected category.
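
To make the D5 versus D6-to-D2 point concrete, the nominal frequency band of each DWT detail level can be tabulated. The sketch below assumes an idealized dyadic filter bank, in which detail level Dj covers roughly fs/2^(j+1) to fs/2^j. The 8 kHz sampling rate and the 100 to 1000 Hz band of interest are illustrative assumptions, not values taken from the cited studies, and the function names are hypothetical.

```python
def dwt_detail_bands(fs, levels):
    """Nominal (low, high) band in Hz for detail levels D1..D<levels>.

    Assumes an idealized dyadic filter bank: each level halves the band.
    Real wavelet filters overlap at the band edges.
    """
    return {f"D{j}": (fs / 2 ** (j + 1), fs / 2 ** j) for j in range(1, levels + 1)}


def levels_overlapping(bands, f_lo, f_hi):
    """Names of the detail levels whose nominal band overlaps [f_lo, f_hi]."""
    return [name for name, (lo, hi) in bands.items() if lo < f_hi and hi > f_lo]


FS_HZ = 8000  # assumed sampling rate, not taken from the cited studies
bands = dwt_detail_bands(FS_HZ, levels=6)
for name, (lo, hi) in bands.items():
    print(f"{name}: {lo:g}-{hi:g} Hz")

# Hypothetical band of interest, chosen only to illustrate the lookup.
print(levels_overlapping(bands, 100, 1000))
```

Under the assumed 8 kHz rate, D5 nominally covers 125 to 250 Hz, while D6 to D2 together span 62.5 Hz to 2 kHz. A method tied to a single level therefore sees only a small part of the range in which wheeze information may appear.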

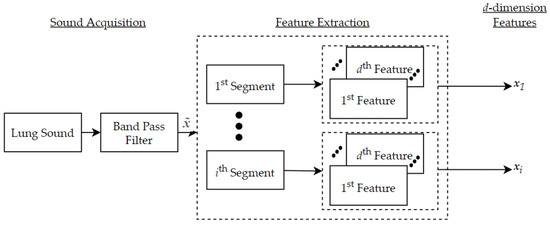

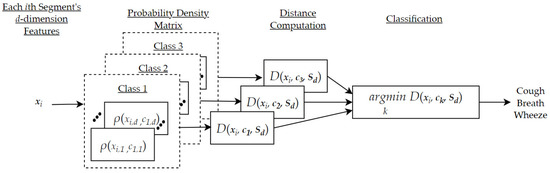

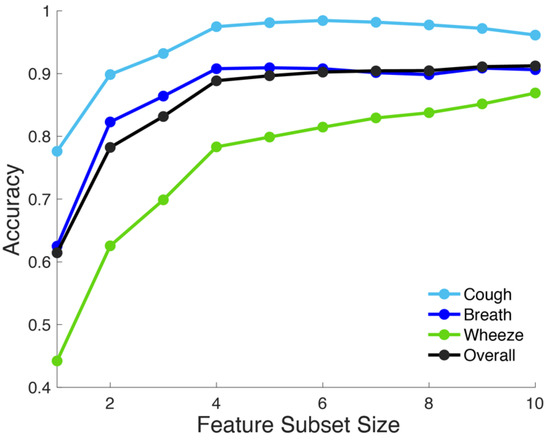

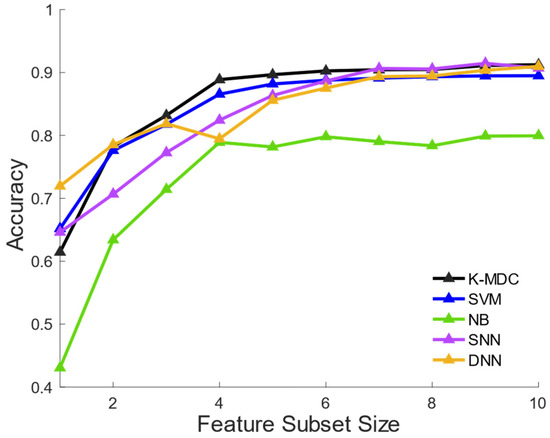

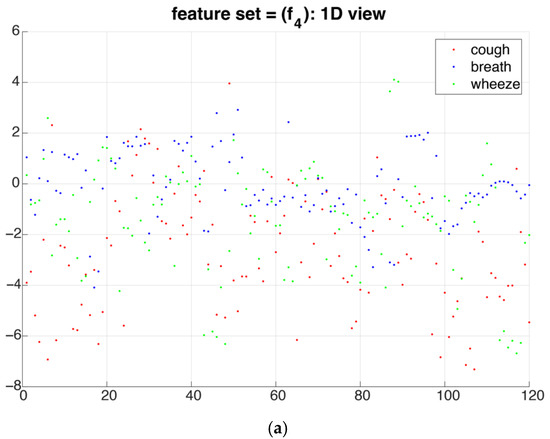

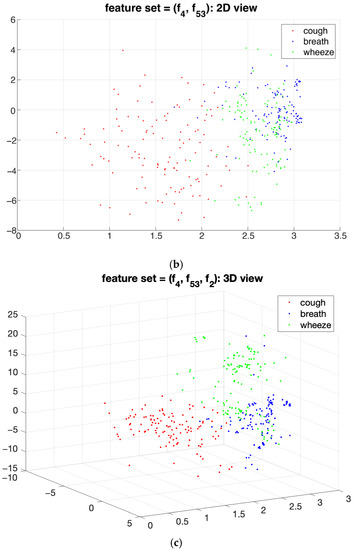

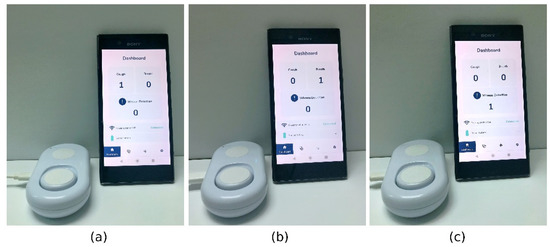

This study addresses the limitations of existing cough and wheeze detection studies and seeks an optimal acoustic signal processing method for implementation in a wearable device. The proposed method uses a kernel-like input mapping strategy: the original time-series sound signals are first transformed to a higher-dimensional feature space that characterizes the temporal and spectral patterns of respiratory sounds. A tailored dimension reduction then distills the predictive information into only a few features so that the algorithm achieves robustness and generalizability. Since data processing is one of the main consumers of battery power in wearable devices [17], the superior prediction performance and minimized feature extraction of the proposed method would make such devices more suitable for clinical applications.
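
The two-step idea described above (expand each short window of the time series into a richer temporal and spectral feature vector, then keep only a few informative features) can be sketched as follows. This is a minimal illustration under stated assumptions, not the method of this study. The feature set (frame energy, zero-crossing rate, Goertzel band powers), the probe frequencies, the sampling rate, the synthetic tones, and the Fisher-ratio ranking used as a stand-in for the reduction step are all placeholders chosen for clarity.

```python
import math


def goertzel_power(frame, fs, freq):
    """Power of `frame` near `freq` (Hz), computed with the Goertzel recurrence."""
    coeff = 2.0 * math.cos(2.0 * math.pi * freq / fs)
    s1 = s2 = 0.0
    for x in frame:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def frame_features(frame, fs, probe_freqs):
    """Map one time-domain frame to [energy, zero-crossing rate, band powers...]."""
    energy = sum(x * x for x in frame) / len(frame)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(frame) - 1)
    return [energy, zcr] + [goertzel_power(frame, fs, f) for f in probe_freqs]


def fisher_ratio(a, b):
    """Between-class separation over within-class spread for one feature."""
    mean_a, mean_b = sum(a) / len(a), sum(b) / len(b)
    var_a = sum((v - mean_a) ** 2 for v in a) / len(a)
    var_b = sum((v - mean_b) ** 2 for v in b) / len(b)
    return (mean_a - mean_b) ** 2 / (var_a + var_b + 1e-12)  # epsilon avoids 0/0


def select_features(class_a, class_b, keep):
    """Indices of the `keep` features with the highest Fisher ratio."""
    n = len(class_a[0])
    scores = [fisher_ratio([r[i] for r in class_a], [r[i] for r in class_b])
              for i in range(n)]
    return sorted(range(n), key=lambda i: scores[i], reverse=True)[:keep]


def tone(freq, fs, n, amp=1.0):
    """Synthetic sine frame; a stand-in for real recordings (assumption)."""
    return [amp * math.sin(2.0 * math.pi * freq * t / fs) for t in range(n)]


FS_HZ, FRAME_LEN, PROBES = 4000, 400, [100, 400]  # all assumed values
high = [frame_features(tone(400, FS_HZ, FRAME_LEN, a), FS_HZ, PROBES) for a in (0.8, 1.0, 1.2)]
low = [frame_features(tone(100, FS_HZ, FRAME_LEN, a), FS_HZ, PROBES) for a in (0.8, 1.0, 1.2)]
print("kept feature indices:", select_features(high, low, keep=2))
```

Keeping only the top-ranked indices means that, on a device, only those features need to be computed per frame, which is the link between dimension reduction and reduced processing load.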

This paper is organized as follows. Section 2 describes the collected dataset, the proposed data-processing methods, and their unique features. It also includes a short discussion of their implementation on an embedded system. In Section 3, the results from the proposed data-processing methods are presented and analyzed. Finally, the findings are discussed in Section 4.

Yuriy Ilkovych -

December 17, 2025 -

Pulmonology -

49 views -

0 Comments -

0 Likes -

0 Reviews
